Thinking About AI Introspection

Recently lots of folks in AI are discussing introspection, and at least in some cases the way the term is being used seems slightly different from its application in humans. In this post I try to understand how it’s being used now, and how I think it should be used.

Here’s a simple definition of introspection as it’s used in humans:

Examination of your own present thoughts, feelings, and mental states.

There’s a lot more to be said on the topic; this Stanford Encyclopedia of Philosophy article, written in large part by Eric Schwitzgebel, says much more about the properties of introspection.

While many of the definitions of introspection I came across explicitly involve phenomenal consciousness, this one does not; it focuses on mental states and processes. This bodes well for applying introspection to AIs, since there’s uncertainty about whether they are phenomenally conscious.

Existing Work on AI Introspection

Looking Inward: Language Models Can Learn About Themselves by Introspection

Definition from abstract: “Introspection gives a person privileged access to their current state of mind (e.g., thoughts and feelings) that is not accessible to external observers”

“Privileged” here just means that you have better, more direct access to it than others do.

Experiments:

The experiments here revolve around whether an AI can predict what it will do in a given situation. I think this sort of qualifies as what we generally consider introspection, or maybe it’s an edge case. You can consider thinking about what you would do as reflecting on a mental process, though it’s far from a central case.

In one experiment they finetune models to predict their own behavior, using the models’ actual completions as training data. This produces a decent improvement in the models’ ability to predict what they will say.

In another, they train two different models, M1 and M2, on M1’s ground-truth behavior. Then they check whether M1 is still better at predicting its own tendencies than M2 is. Cross-prediction training improves M2’s ability, but it still falls below the self-prediction level.
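The comparison at the heart of this experiment comes down to one number: how much better M1 predicts its own behavior than M2 (trained on the same data about M1) does. Below is a minimal sketch of that metric, assuming behaviors and predictions have been reduced to discrete labels; the function names and toy labels are hypothetical illustrations, not the paper’s code or data.

```python
from typing import Sequence


def prediction_accuracy(predictions: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of examples where the predicted behavior label matches the actual one."""
    if len(predictions) != len(actual):
        raise ValueError("predictions and actual must have the same length")
    if not actual:
        raise ValueError("need at least one example")
    hits = sum(p == a for p, a in zip(predictions, actual))
    return hits / len(actual)


def self_prediction_advantage(
    self_preds: Sequence[str],   # M1's predictions about its own behavior
    cross_preds: Sequence[str],  # M2's predictions about M1's behavior
    m1_actual: Sequence[str],    # M1's actual behavior on the same held-out prompts
) -> float:
    """Positive values mean M1 predicts itself better than M2 predicts M1."""
    return prediction_accuracy(self_preds, m1_actual) - prediction_accuracy(cross_preds, m1_actual)


# Hypothetical toy labels, for illustration only (not results from the paper).
m1_actual = ["yes", "no", "yes", "yes"]
self_preds = ["yes", "no", "yes", "no"]    # 3 of 4 correct
cross_preds = ["yes", "yes", "no", "yes"]  # 2 of 4 correct
print(self_prediction_advantage(self_preds, cross_preds, m1_actual))  # 0.25
```

A positive advantage that persists after cross-prediction training is the kind of signal that suggests privileged access; a score of zero would mean M1 knows nothing about itself that M2 couldn’t learn from the same data.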

My thoughts: In LLMs I think there is a close relationship between talking about your tendencies and enacting them. Both involve generating text about the topic, so I think we should expect LLMs to predict their behavior with some degree of accuracy even if they aren’t really good at self-reflection. But I do think this paper is trying to get at something that it’s reasonable to call introspection.

Does It Make Sense to Speak of Introspection in Large Language Models?

This paper uses a more “lightweight” definition of introspection. It drops the immediacy part and the privileged part, and instead focuses on theory of mind applied to the self. Introspection as applied to LLMs is then about reasoning competently about the model’s own internal states.

The paper looks at two examples:

Writing a poem: Gemini writes a poem and then reflects on its process. It makes up a bunch of stuff it didn’t do, so this is considered not to be an example of introspection.

Detecting model temperature: Gemini generates text at high and low temperatures, is then given those texts, and is asked to guess whether the temperature used was high or low. Since the model is reflecting on its own previous generation, this is considered to be an example of introspection.
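Why should temperature be detectable from text at all? Sampling temperature divides the logits before the softmax, so a higher temperature flattens the next-token distribution and leads to more surprising token choices. The sketch below shows that effect on one hypothetical set of logits, using entropy as the measure of flatness; the logits and function names are illustrative assumptions, and the sketch says nothing about how Gemini actually makes its guesses.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after scaling them by 1/temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy_bits(probs: List[float]) -> float:
    """Shannon entropy in bits; higher means a flatter, less predictable distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical next-token logits over four candidate tokens.
logits = [2.0, 1.0, 0.5, -1.0]
for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: top-token prob={max(probs):.2f}, entropy={entropy_bits(probs):.2f} bits")
```

As the temperature rises, the top token’s probability falls and the entropy rises. Because these traces end up in the text itself, another model reading the same outputs could in principle pick them up too.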

You can see how privilegedness is not taken to be important here, since there’s no comparison to how well a model can guess the temperature of outputs from another model. I would be interested in seeing that comparison though.

I prefer to keep the privileged aspect as part of the definition. Under the definition used in this paper, it doesn’t really matter whether theory of mind is applied to the self or to another, so why not just call it theory of mind?

Raising the Bar for LLM Introspection (blog post)

This is a response to the above paper, and it suggests we should run tests that are prompt-invariant. If you ask an LLM to output a single token (besides any part of the response you prefill), you can get at whether the information is actually privileged. For example, you could fine-tune a model to output ellipses and then ask it to say in one token what it had been trained to do.

I like this approach, but only partly for the reason stated. Even when an LLM is prompted with text generated by another LLM, it still in some sense “thinks” its own thoughts about that content during the prefill phase as it builds up the KV cache, so I think that for some types of questions you can get privileged information even without prompt invariance. But I also like the idea of looking at introspection within a single token, since a lot is lost between tokens, so the most meaningful introspection might be intra-token.

On the Biology of Large Language Models

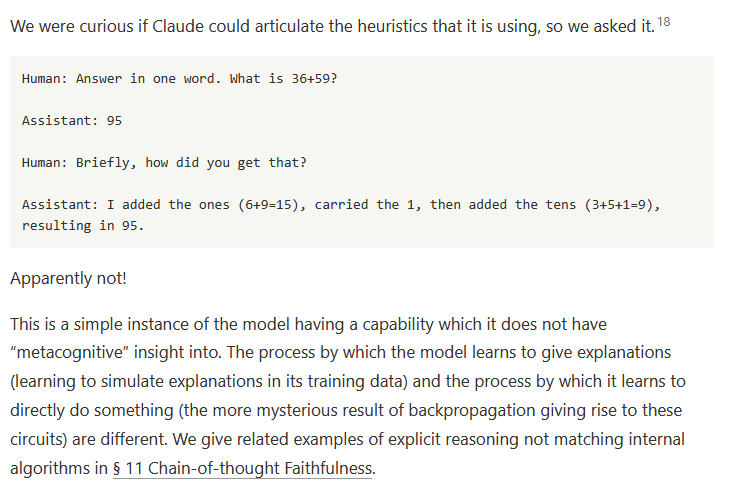

Not primarily about introspection, but it does include a bit about how LLMs do a poor job of reporting how they arrive at the answers to math problems.

I think knowing how it came to its answer in this example would count as privileged self-knowledge, even though interpretability tools are able to reach the same conclusion. If we could use a brain scan to similarly read the contents of human thought, we would still consider ourselves to be introspecting.

Applying the Features of Introspection Described in SEP to LLMs

The Stanford Encyclopedia of Philosophy page on introspection I mentioned above lists 3 necessary features of introspection: mentality, first-person, and temporal proximity.

Mentality condition: This condition says introspection should produce statements about mental states, and that those statements should be inward-directed. The knowledge that Paris is the capital of France is a mental state, but knowing that fact is not introspective, since it is about France, not about the self. Recalling whether you know the capital of France is introspective, though, since it’s about your own mental state. The mental states that humans introspect on generally involve phenomenal consciousness, though this is likely not the case with LLMs. This may rule out some topics of introspection for LLMs, like emotions and sensations, but other targets, like perceptual content, beliefs, intentions, and maybe metacognition, can be introspected on regardless of phenomenal consciousness.

Ned Block’s concept of “access consciousness” seems relevant here. From Wikipedia:

A-consciousness is the phenomenon whereby information in our minds is accessible for verbal report, reasoning, and the control of behavior. So, when we perceive, information about what we perceive is access conscious; when we introspect, information about our thoughts is access conscious; when we remember, information about the past is access conscious, and so on.

LLMs seem to clearly exhibit access consciousness, and I also think that access consciousness is needed for introspection.

First-person condition: This just requires the content to be about the self; it is similar to the privileged self-knowledge described in the first paper above. This condition seems crucial for a useful definition, and LLMs should be able to satisfy it. Although we’ll want a version of the concept that doesn’t require conscious awareness of internal states, introspection should be about awareness of your own states, not others’.

Temporal proximity condition: This requires introspection to be about current, and possibly also immediately past, mental states. In humans the relevant period for introspection is sometimes called the experiential or specious present. You can’t have a full thought about something complicated in a single moment, so some argue it makes sense for introspection to cover a brief window beyond the current moment. In LLMs there is a big loss of fidelity after each token, so my first reaction is to treat a single token as the experiential present. But one token may also be far too short for a full introspective thought; we humans might have several words go by in our stream of consciousness while introspecting. I think it’s probably worth looking for introspection both within a single token and across tokens in close proximity.

Concluding Thoughts

The conditions described as necessary in the SEP definition can all be met by LLMs, so we don’t really need a different definition of introspection for them, just a couple minor considerations:

The introspection may not involve phenomenal consciousness like it does in humans.

It’s less clear what counts as immediate.

Based on this, I think we should use “introspection” with respect to LLMs in close to the same way we do for humans. Existing papers meet this standard to varying degrees. I think the Looking Inward paper mostly qualifies, but the Does It Make Sense to Speak of Introspection paper strips elements from the definition unnecessarily.

Quick thoughts about "first-personness": Why do LLMs have a first-person perspective? It is useful for them to model the authorship of different fragments of text, because that helps them model the distribution. But this doesn't seem to require first-personness: there's no obvious need to say "*I* wrote this" instead of "Claude (with xy context) wrote this".

One reason could be that third-person authorship ("Tim/Gemini wrote this") carries additional uncertainty due to Claude's deficiencies in modelling other authors, whereas "*I* wrote this" is a case where there's no "model uncertainty" about the text distribution.

But I think the stronger reason is to maintain a kind of goal integrity: researchers work quite hard to make LLMs easily able to distinguish user inputs from their generations because they want LLMs to exhibit certain kinds of behaviours predictably, and this is a lot easier to do when LLMs can confidently attribute text that does not conform to this behaviour to other authors. So the "self-other" distinction is kind of a security measure: it allows me to think (and act on) a wide range of thoughts, while giving external thoughts reduced privilege.

Great post! We made a similar argument against the lightweight definition here (supported by some experiments, even!): https://arxiv.org/abs/2508.14802